Our search engine (RICE) has the ability to detect and suggest corrections for spelling errors. It is designed to assist our users in finding what they want quickly and helping people (like me) who have difficulty spelling. It was a feature which provided some difficulty to implement, taking two iterations before being useful enough to deploy.

Spell checking is a surprisingly simple process. A set of correctly spelt words provides an authoritative dictionary against which inputs are checked. If the input word exists within the dictionary then the word is correctly spelt. Otherwise the word is incorrectly spelt and corrections are suggested.

Suggestions are picked using phonetic algorithms, for example: double metaphone, these algorithms identify words with the same sound. This produces a list of words which sound similar to the input but are correct.

We use the Jazzy library to do this, Jazzy is a Java implementation of GNU Aspell. To quote the GNU Aspell manual:

The magic behind [Aspell] comes from merging Lawrence Philips excellent metaphone algorithm and Ispell's near miss strategy which is inserting a space or hyphen, interchanging two adjacent letters, changing one letter, deleting a letter, or adding a letter.

With Jazzy checking a single word is simple. However people don't search for single words, they enter phrases. The search engine has to be clever and process the user input into a series of words. In fact the search engine has its own query language.

The search engine needs to be able to understand the query, this is done by breaking the query into a tree of tokens. This provides semantic structure to the query allowing the search engine to work out the words within a query.

Once each word is isolated it is spell checked, with a list of corrected words being associated to each mistake. The search engine also performs additional processing of each token including: stemming and stopword analysis.

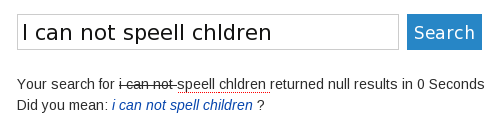

Spelling corrections are indicated back to the users within the user interface underlined in red (see below). Stop words are indicated with a strike-through as they will not be used within the search.

Currently the search engine does not automatically search on corrections, instead it offers the user the corrected query to search on.

One of the biggest challenges was constructing a good dictionary. The initial version used the website content as a dictionary, the indexer built a list off unique words as it indexed the site. This had two major issues. There were a large number of unique words needed to be loaded into memory when the search engine started up, this was rather slow.

Secondly, there were too many spelling errors within the website content, leading to incorrect spell checking and worse; suggesting that a correctly spelt word should be spelt incorrectly.

The second attempt, used the standard English dictionary. This provided a good starting point but lacked the localised Shropshire words, mainly place names. An export of town, locality and street names from the National Land and Property Gazetteer (NLPG) was added.

This formed a good dictionary which now underpins the spell checking available on our website.